THE *CERTAINTY PRINCIPLE*! By Gong Tienzen

From: https://tienzengong.wordpress.com/2014/12/27/the-certainty-principle/

Sean Carroll *was* a diehard supporter of the {Everett (many-worlds) formulation}. Yet, on December 16, 2014, he wrote: “Now I have graduated from being a casual adherent [Everett (many-worlds) formulation] to a slightly more serious one” (http://www.preposterousuniverse.com/blog/2014/12/16/guest-post-chip-sebens-on-the-many-interacting-worlds-approach-to-quantum-mechanics/ ). This seems a major ‘retreat’ from his previous diehard position, as the truth will always prevail. No one can remain diehard about a wrong position.

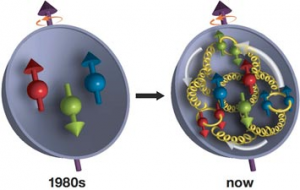

The *Uncertainty Principle (delta P x delta S >= ħ)* dates from 1927 and is now nearly 90 years old. It helped drive the great advances in physics that led to the formulation of the Standard Model in the 1970s. However, in the almost 50 years since then, there has been no (absolutely zero) ‘theoretical’ advancement in ‘mainstream’ physics (as SUSY, M-string theory and the multiverse are all failures thus far). Today, this UP (Uncertainty Principle) stands on five points.

One, UP is about *uncertainty*, which is an intrinsic attribute of nature (it cannot be removed by better measurement). It is also *fundamental*, not emergent. That is, it is *empirical*, not *derived*.

Two, in addition to the *uncertainty*, UP has a very bizarre attribute (superposition); that is, a *particle (although wave-like)* can be at *different* locations at the same time. Yet, this superposition is not *observed* at all. Thus, it gives rise to many different *interpretations* (such as the dead/live cat, the many-worlds formulation, etc.).

Three, with UP, Quantum Field Theory (QFT) was formulated, and its application to the Standard Model (SM) is a great success. But its further applications in SUSY, M-string theory and the multiverse are thus far total failures.

Four, SM is a 100% phenomenological model, as it has many *free parameters* which need some theoretical basis. After 50 years of effort by thousands of top physicists, *mainstream* physics has failed at this task. One easy way out of this failure is to claim that those ‘free parameters’ are *happenstances* which have no theoretical *base*. Again, UP became the mechanism which produced many (zillions of) *bubbles* at the ‘starting point’, and these bubbles evolve with many ‘different sets’ of free parameters. Thus, the free parameters of ‘this’ universe need not have any theoretical base (they cannot be *derived*).

Five, UP is obviously not compatible with General Relativity (GR, a *gravity* theory). *Quantum gravity* (an attempt at unifying these two) is thus far a total failure.

These five points are where we stand on this UP, and there is no sign of any kind of breakthrough. In fact, there will be no breakthrough from the current position, as most of those points are wrong. There is no chance of getting it right while starting from many wrongs.

The UP equation (delta P x delta S >= ħ) is of course correct, as it is an empirical formula. The problem lies in its *interpretation*.

There is a Universe which we are living in and observing. This universe has a *structure* which is *stable*, that is, many things about this universe are *Certain*.

Point 1, there is an *Event Horizon (EH)* for ‘this’ universe. Anything beyond the EH can only be speculated. So, we should FIRST discuss the *framework (structure)* of this universe inside of the EH.

Point 2, the EH is constructed mainly by the *photon*, which is the product of the Electromagnetic interaction (governed by a nature constant, Alpha). Alpha is a *pure number* (dimensionless); that is, it could be a true constant. Is it truly a true constant? There is no reason to get into anything that is not 100% certain. I will thus define an EC (Effective Certainty): anything that does not change during the lifetime of this universe is an EC-constant. Furthermore, Alpha consists of three other nature constants {c (light speed), ħ (Planck constant), e (electric charge)} which are also EC-constants.

e (electric charge) = (ħ c)^(1/2).

{ħ = delta P x delta S} is not about *uncertainty* but is a *viewing window* (see http://www.prequark.org/Constant.htm ). Then, the largest *viewing window* is (ħ c). This *certainty (Event Horizon, the ‘causal world’)* is totally defined by {ħ = delta P x delta S}, as EH = (ħ c) T, where T is the life-span of this universe. Thus, the EH (event horizon) is totally certain.

Point 3, if this universe is built with some fixed measuring rulers, its structure must be CERTAIN. The EH is built with two EC measuring rulers (c, ħ), and these two rulers are locked by another EC-constant, e {electric charge = (ħ x c)^(1/2)}. Then {c, ħ, e}, the *measuring rulers*, are further locked by an EC-constant (Alpha, a pure number, dimensionless). Thus, this EH (Event Horizon) is very much constructed with *certainty*, locked by two safety locks.

Point 4, going beyond the EH (event horizon), why is there something rather than nothing? The answer is that Nothingness is the essence while the something is emergent, see https://medium.com/@Tienzen/here-is-the-correct-answer-5d1a392f700#.x9via6kfe . This {nothing to something transformation} is 100% certain. Its consequences are also 100% certain.

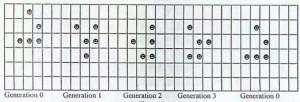

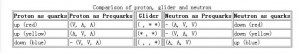

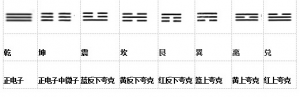

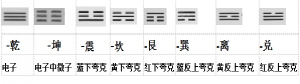

One, it gives rise to 48 SM (Standard Model) fermions and 16 energy spaces (dark energy), see http://prebabel.blogspot.com/2012/04/48-exact-number-for-number-of.html

Two, it gives rise to nature constants (Cabibbo and Weinberg angles), see http://prebabel.blogspot.com/2011/10/theoretical-calculation-of-cabibbo-and.html

Three, the calculation for Alpha is 100% certain.

Beta = 1/alpha = 64 (1 + first order mixing + sum of the higher order mixing)

= 64 (1 + 1/Cos A (2) + .00065737 + …)

= 137.0359 …

A (2) is the Weinberg angle, A (2) = 28.743 degrees

The sum of the higher order mixing = 2(1/48) [(1/64) + (1/2) (1/64) ^2 + …+(1/n)(1/64)^n +…]

= .00065737 + …
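The arithmetic above can be checked with a minimal sketch. The function names `beta` and `higher_order_mixing` are illustrative, not from the original. When the series is evaluated directly as written, it comes out near 0.000656, so the quoted value .00065737 is passed in as a parameter rather than recomputed.

```python
import math


def higher_order_mixing(terms: int = 50) -> float:
    """Evaluate 2(1/48) * sum over n >= 1 of (1/n)(1/64)^n, truncated after `terms` terms."""
    return 2 * (1 / 48) * sum((1 / n) * (1 / 64) ** n for n in range(1, terms + 1))


def beta(weinberg_angle_deg: float = 28.743, higher_order: float = 0.00065737) -> float:
    """Beta = 1/alpha = 64 (1 + 1/cos A(2) + higher-order sum), as written in the text."""
    first_order = 1 / math.cos(math.radians(weinberg_angle_deg))
    return 64 * (1 + first_order + higher_order)


if __name__ == "__main__":
    print(f"Beta with the quoted higher-order term: {beta():.4f}")
    print(f"Series evaluated directly:              {higher_order_mixing():.8f}")
```

With either value of the higher-order term, the result agrees with 137.0359 to about four decimal places, because the term is multiplied only by 64.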

Four, the UP equation {delta P x delta S >= ħ} can be precisely derived.

The few examples above show that this universe (its EH at least) is governed by *certainty* while that certainty is based on the equation {ħ = delta P x delta S}. But, can this equation be derived, from something more fundamental? The answer is a big YES.

This universe is expanding (accelerating, in fact). But where does it expand into? It expands from *HERE* to *NEXT*, and this is powered by the so-called *dark energy* (see http://prebabel.blogspot.com/2013/11/why-does-dark-energy-make-universe.html ), which is described by a unified force equation.

F (unified force) = K ħ / (delta T * delta S), K the coupling constant.

Then, Delta P = F * Delta T = K ħ/ Delta S

So, delta P * delta S = Kħ >= ħ; now this equation is *derived*, no longer only an empirical formula. That is, it is not fundamental, as the unified force (UF) equation is more fundamental. However, this {delta P * delta S >= ħ} should be named the *Certainty-Principle*. Also see http://prebabel.blogspot.com/2013/11/why-does-dark-energy-make-universe.html
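The three steps above can be written out as one chain. This is only a restatement, under two assumptions the text implies but does not state: that delta P is the impulse F * delta T, and that the coupling constant satisfies K >= 1, which the final inequality needs.

```latex
\begin{aligned}
F &= \frac{K\hbar}{\Delta T\,\Delta S} \\
\Delta P &= F\,\Delta T = \frac{K\hbar}{\Delta S} \\
\Delta P\,\Delta S &= K\hbar \;\ge\; \hbar \qquad (\text{assuming } K \ge 1)
\end{aligned}
```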

Five, the Planck CMB data on dark energy and dark mass can be PRECISELY calculated with 100% certainty.

Recently, the Planck data (in percent: dark energy = 69.2; dark matter = 25.8; and visible matter = 4.82) describe the structure of this universe (at least its Event Horizon). With only *numbers* (not variables), they again show that this universe is *certain*. Yet talk alone is in vain: this {Certainty-Model} must account for these numbers.

In this *Certainty-Model*, there is a *mass-charge*; that is, all particles which carry rest mass carry that mass-charge, which is a *constant* (just as e, the electric charge, is a constant). Mass-charge describes the *structure*, not the particle. The mass-kingdom is divided into 48 dominions which carry the same mass. So, the neutrino and the top quark should have the same *mass* (mass-charge), while their *apparent* mass difference is caused by a name-tag (or pimple) principle.

Now, we can calculate this visible/dark mass-ratio.

One, among 48 mass dominions, only 7 of them are visible.

Two, some of the dark dominions do become visible with a ratio W.

So, the d/v (dark/visible ratio) = [41 (100 – W) % / 7]

When W = 9% (according to the AMS2 data), d/v = 5.33
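The ratio above is simple enough to check in a few lines. This is a minimal sketch, and the function name and its parameters are illustrative rather than part of the original model description.

```python
def dark_to_visible_ratio(w_percent: float, total_dominions: int = 48,
                          visible_dominions: int = 7) -> float:
    """d/v = [dark dominions x (100 - W)%] / visible dominions, as in the text."""
    dark_dominions = total_dominions - visible_dominions  # 48 - 7 = 41
    return dark_dominions * (100 - w_percent) / 100 / visible_dominions


if __name__ == "__main__":
    print(f"d/v at W = 9%: {dark_to_visible_ratio(9):.2f}")
```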

In this *Certainty-Model*, the space, time and mass form an *iceberg model*.

Space = X

Time = Y

Total mass (universe) = Z

And X = Y = Z

In an iceberg model (ice, ocean, sky), Z is ice while the (X + Y) is the ocean and sky, the energy ocean (or the dark energy). Yet, the ice (Z) will melt into the ocean (X + Y) with a ratio W.

When W = 9%,

[(Z – V) x (100 – W) %] /5.33 = V, V is visible mass of this universe.

[(33.33 –V) x .91]/5.33 = V

V= 5.69048 / 1.17073 = 4.86 (while the Planck data is 4.82),

D (Dark mass) = [(Z – 4.86) x (100 – W) %] = [(33.33 – 4.86) x .91] = 25.91 (while the Planck data = 25.8)

So, the total dark energy = (X + Y) + [(Z – 4.86) x W %] = 66.66 + (28.47 x 0.09) = 69.22 (while the Planck data is 69.2)
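The three numbers above follow from one linear equation in V, so they can be reproduced with a short sketch. The function name `certainty_model_budget` is hypothetical. It takes X = Y = Z = 100/3 instead of the rounded 33.33, so the last digits may differ slightly from the hand calculation.

```python
def certainty_model_budget(w_percent: float = 9.0, d_over_v: float = 5.33):
    """Return (visible mass, dark mass, dark energy) in percent of the total."""
    z = 100 / 3                       # total mass share, with X = Y = Z
    keep = (100 - w_percent) / 100    # fraction of dark mass that stays mass
    melt = w_percent / 100            # fraction that melts into the energy ocean

    # Solve [(Z - V) x keep] / (d/v) = V for V.
    v = (z * keep / d_over_v) / (1 + keep / d_over_v)
    dark_mass = (z - v) * keep
    dark_energy = 2 * z + (z - v) * melt   # (X + Y) plus the melted part
    return v, dark_mass, dark_energy


if __name__ == "__main__":
    v, d, e = certainty_model_budget()
    print(f"visible = {v:.2f}, dark mass = {d:.2f}, dark energy = {e:.2f}")
```

By construction the three shares always sum to 100, whatever value of W is chosen.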

Except that ‘W’ is a free parameter, the above calculation is *purely* theoretical, and it matches the data to an amazing degree. Thus far, the *Certainty-Model (fixed framework)* prevails. Also see https://tienzengong.wordpress.com/2015/04/22/dark-energydark-mass-the-silent-truth/

Six, how about the part of the universe which goes beyond the EH (event horizon) and how about the quantum entanglement?

The m (mass) = (ħ /c)(2pi/L), where L is the wavelength (lambda) of a particle. As L (lambda) is an attribute of a particle, m (mass) is also an attribute of a particle (not a universal constant) (see http://prebabel.blogspot.com/2012/04/origin-of-mass-gateway-to-final-physics.html ). Again, mass is also defined by ħ. While e (electric charge, with the photon) measures this universe from *without* (times c, the light speed), the mass measures this universe from *within* (divided by c). What does this mean?
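As a numeric aside on the mass relation at the start of the paragraph above, here is a minimal sketch. It assumes that L is the particle's Compton wavelength (the text does not say which wavelength is meant) and uses CODATA values for ħ, c and the electron's Compton wavelength. The function name is illustrative.

```python
import math

HBAR = 1.054571817e-34               # reduced Planck constant, J s (CODATA)
C = 299_792_458.0                    # speed of light, m/s (exact)
ELECTRON_COMPTON_WAVELENGTH = 2.42631023867e-12  # m (CODATA); assumed meaning of L


def mass_from_wavelength(wavelength_m: float) -> float:
    """m = (hbar / c) * (2 pi / L), the relation quoted in the text."""
    return (HBAR / C) * (2 * math.pi / wavelength_m)


if __name__ == "__main__":
    m = mass_from_wavelength(ELECTRON_COMPTON_WAVELENGTH)
    print(f"mass for the electron's Compton wavelength: {m:.4e} kg")
```

Under that assumption the relation returns the electron rest mass, about 9.109e-31 kg.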

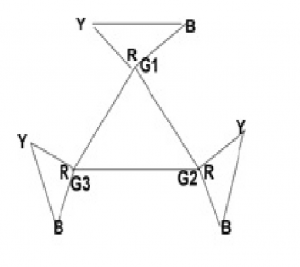

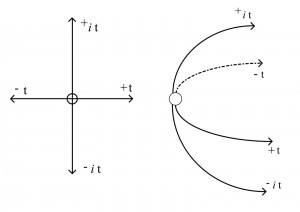

Figure 1: the topology of this universe

The center of figure 1 is the North Pole; the outer circle is the South Pole {the entire circle (with infinitely many geometric points) is just a single point}. Suppose we split a quantum state at the North Pole and send the two parts to points A and B. The distance AB can be viewed as 2r (r being the radius of the circle), so the CAUSAL transmission between the two points takes time t = 2r/c. Yet, in reality (nature, with quantum entanglement), the distance between A and B is zero (both being the same point); so the real transmission takes t’ = 0/c = 0, the instantaneous, the spooky action.

For two particles C and D, their causal distance CD is measured with the photon, and the causal transmission time is t = CD/c. On the other hand, gravity arises from each particle (C, D or E) bouncing between the real and the ghost point, and their distances to the ghost point (such as the South Pole) are the same regardless of their causal distances (even when CD > CE). That is, their gravitational interaction strength is measured with the causal distance (1/r^2), while their transmission rates are all the same (for CD, CE or DE), as they are linked through the ghost point.

Quantum entanglement is also linked via this ghost point, and thus its transmission is also instantaneous.

This Real/Ghost symmetry is the base (source) for all physics laws.

First, giving rise to SM particle zoo.

Second, providing the calculation for nature constants.

Third, giving rise to quantum spin (1/2 ħ).

Fourth, giving rise to gravity and dark energy via this quantum, see https://medium.com/@Tienzen/quantum-gravity-mystery-no-more-1d1bf39ad255#.qs69g6a58

Fifth, instantaneity is a FACT for both gravity and quantum entanglement.

Of course, I should still address 1) the *interpretation* issue which is mainly powered by the double-slit experiment, and 2) the supersymmetry issue.

The double-slit pattern is the result of waves. For a sound wave, the carrying medium is air particles. For a water wave, the carrying medium is water molecules. For the quantum wave, the carrying medium is ‘spacetime’ itself. That is, particles (such as the electron, proton, etc.) are not waves themselves, as they are as rigid as any steel bullet. Their wave-patterns are produced by their carrier (the spacetime, the eternal wave). When surfers go through a double-slit in a surfing contest at a nearby beach, their landing pattern will look just like a wave-pattern. Please see (http://putnamphil.blogspot.com/2014/05/the-measurement-problem-in-qm.html?showComment=1402087925047#c6965420756934878751 and https://tienzengong.wordpress.com/2014/06/07/measuring-the-reality/ ) for a more detailed discussion. Thus, the Schrödinger’s cat anxiety is not needed, and the ‘many-worlds interpretation’ is total nonsense.

Last but not least, I should discuss the *supersymmetry* issue. Yes, there is a supersymmetry above the SM symmetry. Yes, there is a symmetry-partner for this SM universe. This again is the result of ħ, the quantum *spin*. For all fermions (with ½ ħ), they see *two* copies of universe. And, that second copy is the supersymmetry-partner of this SM universe (see https://tienzengong.wordpress.com/2014/02/16/visualizing-the-quantum-spin/ for details). That is, the ħ is the ‘source’ of supersymmetry which is the ‘base’ for gravity. Gravity is the force which moves the entire universe from “HERE” to “NEXT” (see http://www.prequark.org/Gravity.htm ), and ħ is the ‘source’ of the gravity. Quantum gravity is now complete.

The {Nothing to Something transformation} is precise and certain.

The measuring rulers are double locked, with total certainty.

The calculations of nature constants and Planck CMB data are precise and totally certain.

The SM particle rising rule is totally precise and certain.

The expansion of the universe is totally precise and certain.

Now, the conclusion here is that the equation {ħ = delta P x delta S} is all about *certainty*, not about uncertainty.

PS: In addition to the above points, the quantum algebra can be a very powerful point on this issue, see http://prebabel.blogspot.com/2012/09/quantum-algebra-and-axiomatic-physics.html .

Furthermore, many prominent physicists are now coming over to my camp, such as Steven Weinberg (Nobel Laureate), see http://prebabel.blogspot.com/2013/01/welcome-to-camp-of-truth-nobel-laureate.html and Gerard ’t Hooft (Nobel Laureate) who just showed that quantum behavior could be described with cellular automaton (totally deterministic), see http://prebabel.blogspot.com/2012/08/quantum-behavior-vs-cellular-automaton.html .

Last but not least, the so-called black-hole information paradox.

In the mid-1970s, Stephen Hawking claimed that a black hole will destroy all information about what has fallen in, in accordance with the UP (uncertainty principle). This gives rise to the black-hole information paradox. Yet last year (2015, 40 years after his claim), Hawking claimed the opposite: the information is not lost. Andrew Strominger (physics professor at Harvard University) explained the facts which forced Hawking to have a change of heart.

Fact one, without determinism, the laws of nature cannot manifest and stay constant, let alone govern this universe. That is, even if UP were truly about uncertainty, UP ITSELF must be certain (determined). If a black hole destroys information, then this universe is not deterministic; this is a theoretical tragedy and empirically untrue.

Fact two, if determinism breaks down in a big black hole in accordance with the UP, there should also be little black holes popping in and out of the vacuum under UP. Again, this is empirically untrue, and it is now ruled out by the recent LHC data.

Fact three, in order to say that a symmetry implies a conservation law, you need determinism. Otherwise symmetries only imply conservation laws on average. Again, this ‘average’ is empirically untrue. The conservation laws are precise, not just true on average.

These facts are pointed out by Strominger and Hawking (see http://blogs.scientificamerican.com/dark-star-diaries/stephen-hawking-s-new-black-hole-paper-translated-an-interview-with-co-author-andrew-strominger/ ). After being wrong for over 40 years, Hawking finally had a change of heart, exactly one year after I published this “Certainty Principle” article.

李小坚 龚天任
