Nature has been moving nicely, minute by minute, for the past 14 billion years, playing its predetermined dance toward its predetermined destiny with grace and joy.

In contrast, human mainstream physics has been in a hellfire nightmare since the discovery of a new boson in 2012. Did it fall into this hellfire nightmare suddenly and unexpectedly? Or have many hellfire demons plagued mainstream physics since its beginning 100 years ago? Logically, the latter must be the case. That is, the cause of today’s nightmare can be traced through its history.

The brief history

One, in 1925–1927, the Copenhagen doctrine DECLARED that ‘quantum uncertainty’ is an intrinsic attribute of nature that, in principle, cannot be removed by improving measurement; this led to the ‘measurement mystery’.

Soon after, Schrödinger came up with his cat riddle, which CREATED the ‘superposition mystery’, the omnipresence of the ‘Quantum God’.

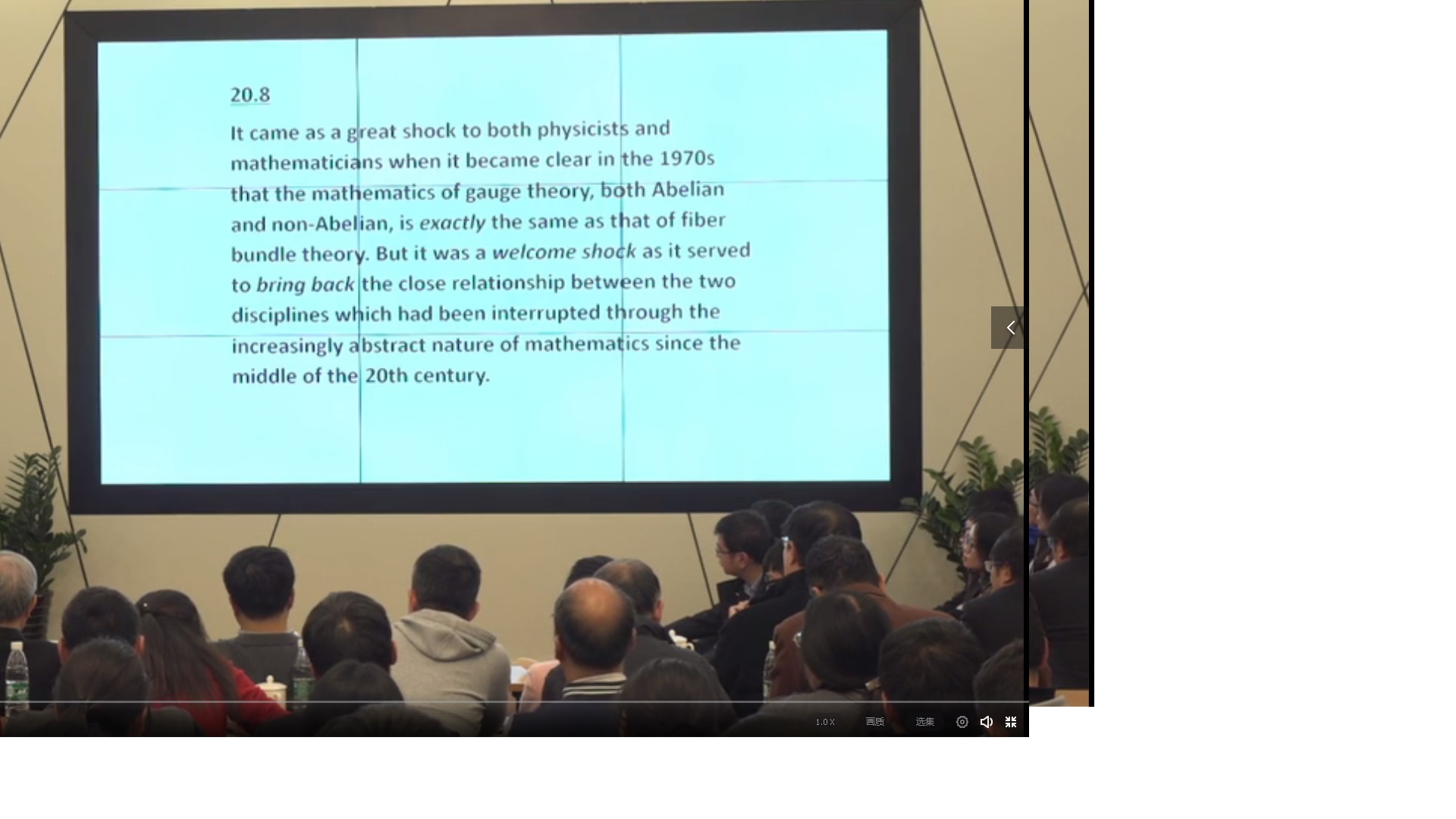

Two, in 1954, Chen Ning Yang and Robert Mills developed a general gauge symmetry theory. Then, in the early 1960s, Murray Gell-Mann discovered the “Eightfold Way” representation in the experimental data. Yang–Mills theory is a mathematically beautiful tool for describing symmetries, while the Eightfold Way obviously encompasses a beautiful symmetry. However, the Yang–Mills field must be massless in order to maintain gauge invariance.

Three, for the Yang–Mills gauge symmetry to make contact with the real world (the Eightfold Way), it must be spontaneously broken. In 1964, Higgs and others came up with a ‘tar-lake-like field’ (the Higgs mechanism) to break the SU gauge symmetry spontaneously.

Four, in 1967, Steven Weinberg and others combined an SU(2) × U(1) gauge symmetry (SU(2) being a Yang–Mills gauge) with the Higgs mechanism to construct the EWT (Electroweak Theory). This EWT works beautifully for a two-quark model (with up and down quarks).

Five, in the November Revolution of 1974, Samuel Ting discovered the charm quark via the J/ψ meson; the original two-quark model was thus expanded into a four-quark model.

Six, in 1973, Kobayashi and Maskawa published “CP-Violation in the Renormalizable Theory of Weak Interaction”. Combined with the Cabibbo angle (θc), introduced by Nicola Cabibbo in 1963, this gave the ‘Cabibbo–Kobayashi–Maskawa’ (CKM) matrix. Because CP violation in the CKM matrix demands AT LEAST ‘3 generations of quarks’, a six-quark model was constructed: the SM (Standard Model). The SM further predicts the charged weak current (carried by the Ws) and the neutral current (carried by the Z). The tau (τ) lepton was discovered in 1975.

Seven, in 1983, the Ws were discovered, and the Z soon after. Finally, the top quark was discovered in 1995.
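Item Six’s claim that the CKM matrix needs AT LEAST 3 generations follows from a standard parameter count (the counting argument is textbook physics, not taken from this post). A minimal Python sketch: an n × n unitary mixing matrix has n² real parameters, n(n−1)/2 of which are rotation angles; 2n−1 of the remaining phases can be absorbed by rephasing the quark fields, so (n−1)(n−2)/2 physical CP-violating phases survive. The function name is hypothetical.

```python
def quark_mixing_parameters(n_generations: int) -> tuple[int, int]:
    """Return (mixing angles, physical CP-violating phases) for an
    n x n unitary quark-mixing matrix."""
    n = n_generations
    total = n * n               # real parameters of an n x n unitary matrix
    angles = n * (n - 1) // 2   # rotation (mixing) angles
    removable = 2 * n - 1       # relative phases absorbed by quark-field rephasing
    phases = total - angles - removable
    return angles, phases


for n in (2, 3, 4):
    angles, phases = quark_mixing_parameters(n)
    print(f"{n} generations: {angles} angle(s), {phases} CP-violating phase(s)")
```

With 2 generations the only parameter is a single angle (the Cabibbo angle) and there is no CP phase; with 3 generations exactly one CP-violating phase appears.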

At this point, the SM was basically confirmed. However, the Higgs mechanism also predicts a field boson. As the Higgs mechanism is the KEY cornerstone of the SM, the SM cannot be complete unless the Higgs field boson is discovered.

The brief history of BSMs

With the great success of the SM, a few BSMs (beyond-Standard-Model theories) quickly emerged.

One, the GUT (Grand Unified Theory), with a higher symmetry {SU(5), which contains SU(3) × SU(2) × U(1), at an energy scale of about 10^16 GeV}. This work was mainly done by Georgi and Glashow in 1974. The key prediction of GUT is proton decay. From the early 1980s, a major effort was launched to detect proton decay. But the proton’s half-life is now firmly set at over 10^33 years, much longer than the lifetime of this universe. To date, all attempts to observe new phenomena predicted by GUTs (like proton decay or the existence of magnetic monopoles) have failed. With these results, Glashow basically went into hibernation, hoping that a ‘sterile neutrino’ would come to his rescue.

Two, the Preon model (by Abdus Salam), which was expanded into the Rishon model (mainly by Haim Harari). It has sub-quarks (T, V): {T (Tohu, which means “unformed” in the Hebrew Genesis) and V (Vohu, which means “void” in the Hebrew Genesis)}.

Rishons (T or V) carry hypercolor to reproduce the quark color, but this setup renders the model non-renormalizable. So it was abandoned almost on day one.

Three, M-string theory began as a bosonic string theory. To produce fermions, it must incorporate the idea of SUSY. That is, M-string theory and SUSY must be dicephalic parapagus twins.

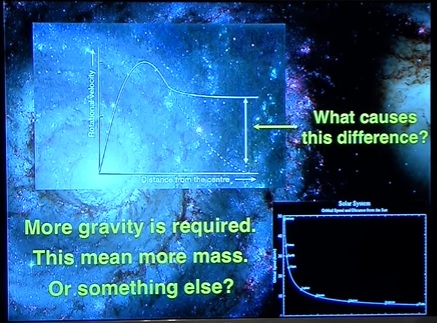

In the 1960s–1970s, Vera Rubin and Kent Ford confirmed the existence of dark mass (not dark matter). SUSY was claimed to be the best candidate to provide this dark mass. Thus, M-string theory has dominated BSM physics for the past 40 years.

The awakening of the demons

In 2012, a Higgs-boson-like particle was discovered, with a measured mass of 125.26 GeV, which is trillions and trillions of times smaller than the value expected from naturalness.

The only way out of this predicament is a hidden massive partner that cancels (balances) out its huge mass correction. This massive partner could be a SUSY particle or a twin Higgs. By March 2017, neither a twin Higgs nor any SUSY particle had been discovered below about 2 TeV. Even if SUSY exists at a higher energy scale, it is no longer a solution to this Higgs-naturalness issue.

Furthermore, the b/b-bar channel should account for nearly 60% of Higgs boson decays. But as of now (November 2017), this channel is still not confirmed. The best result was 4.5 sigma, from a report a year ago, which is not enough for a confirmation. Most importantly, even if the channel were confirmed, it could not reach this 60% mark.
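To put ‘trillions and trillions’ into numbers, here is a rough Python sketch of the naturalness ratio. It assumes, following the common textbook framing of the hierarchy problem (not a figure given in this post), that the ‘expected value’ is the Planck mass, about 1.22 × 10^19 GeV. Because quantum corrections act on the mass squared, the cancellation a hidden partner would have to supply is set by the squared ratio.

```python
import math

HIGGS_MASS_GEV = 125.26        # measured mass quoted in the text
PLANCK_MASS_GEV = 1.22089e19   # assumed "natural" scale: the Planck mass

# How many times smaller the measured mass is than the assumed natural scale
ratio = PLANCK_MASS_GEV / HIGGS_MASS_GEV

# Quantum corrections shift the mass squared, so a cancelling partner must
# balance the bare and correction terms against each other to this precision
tuning = (HIGGS_MASS_GEV / PLANCK_MASS_GEV) ** 2

print(f"mass ratio:  ~10^{math.log10(ratio):.0f}")
print(f"fine-tuning: ~1 part in 10^{-math.log10(tuning):.0f}")
```

Under this assumption the mass ratio is about 10^17 and the required cancellation is about 1 part in 10^34; a lower assumed cutoff would shrink both numbers.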

Thus, many physicists are now open to the possibility that this 2012 boson might not be the Higgs boson per se.

Yet, this Higgs demon does not stop its dance with the above issues.

By definition, the neutrino’s mass cannot be accounted for by the Higgs mechanism, viewed as a tar-lake-like field that slows down a massless particle so that it gains an apparent mass, because neutrinos do not slow down in the Higgs field at all. Thus, neutrinos must be Majorana fermions.

Yet, the Majorana angel has never been observed.

One, by definition, a Majorana particle must be its own antiparticle. But much data now shows that the neutrino is different from its antiparticle.

Two, a Majorana neutrino should induce ‘neutrinoless double beta decay’, but the half-life of this decay is now constrained to be over 10^25 years, much longer than the lifetime of this universe.

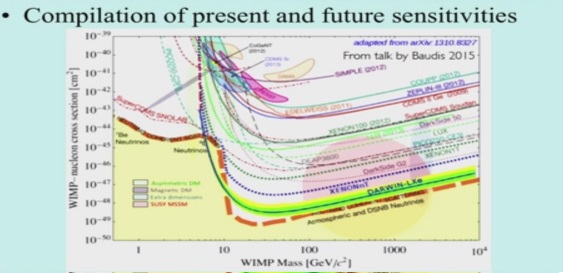

Three, again by definition, a Majorana particle’s mass must come from the ‘seesaw’ mechanism, that is, it must be balanced by a massive partner, such as a sterile neutrino or something else (SUSY or whatnot). But the ‘sterile neutrino’ is now almost completely ruled out by many datasets (IceCube, etc.).

Four, the most recent analysis of ‘Big Bang Nucleosynthesis’ fits well if the neutrino is a Dirac fermion (without a massive partner). If the neutrino is viewed as a Majorana particle (with a hidden massive partner), Big Bang Nucleosynthesis can no longer fit the observational data.
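The phrase ‘much longer than the lifetime of this universe’ is used for both half-life lower bounds quoted in this post (over 10^33 years for proton decay, over 10^25 years for neutrinoless double beta decay). A small Python sketch of that comparison, assuming an age of about 13.8 billion years (the post rounds this to 14 billion); the helper name is hypothetical.

```python
AGE_OF_UNIVERSE_YEARS = 1.38e10  # assumed: about 13.8 billion years

# Half-life lower bounds quoted in the text, in years
HALF_LIFE_BOUNDS = {
    "proton decay": 1e33,
    "neutrinoless double beta decay": 1e25,
}


def multiples_of_universe_age(half_life_years: float,
                              age_years: float = AGE_OF_UNIVERSE_YEARS) -> float:
    """How many times the age of the universe a given half-life spans."""
    return half_life_years / age_years


for process, bound in HALF_LIFE_BOUNDS.items():
    print(f"{process}: > {multiples_of_universe_age(bound):.1e} x the age of the universe")
```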

Without a Majorana neutrino, the Higgs mechanism is DEAD. With a dead Higgs mechanism, the SM is then fundamentally wrong as a correct model, although it remains an effective theory.

This Higgs demon is now killing the SM, pushing mainstream physics into the hellfire dungeon.

Of course, Weinberg and many other prominent physicists still hope for a rescue from one of the BSMs, especially M-string theory. But SUSY (a major component of M-string theory) is now totally ruled out as an EFFECTIVE rescue. And many of the most prominent string theorists are now abandoning M-string theory; see Steven Weinberg’s video presentation for the International Centre for Theoretical Physics on Oct 17, 2017, at 1:32 (the one-hour-32-minute mark). The video is available at https://www.youtube.com/watch?v=mX2R8-nJhLQ . A brief quote of his remarks is available at http://www.math.columbia.edu/~woit/wordpress/?p=9657 .

The rescuing angels

While theoretical physics falls into the hellfire dungeon step by step, the angels of experimental physics are descending on Earth with sincerity and kindness.

One, dark mass (not dark matter) was firmly confirmed by the 1970s.

Two, the accelerating expansion of the universe was discovered in 1997.

Three, a good estimate of the CC (Cosmological Constant), ~3×10^−122 in Planck units, was reached in the 2000s.

Four, a new boson with a mass of 125.26 GeV was discovered in 2012.

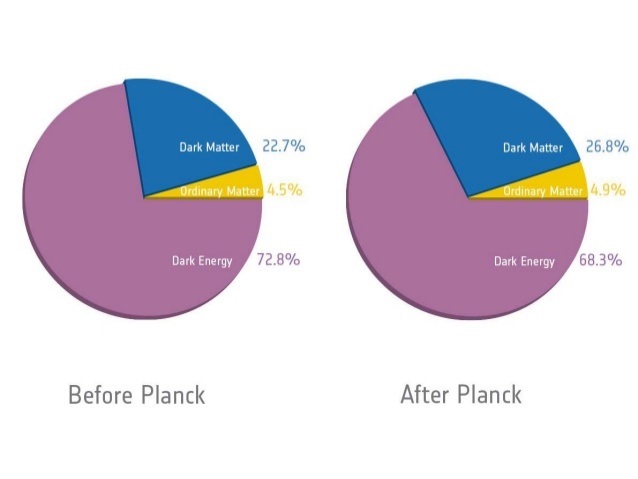

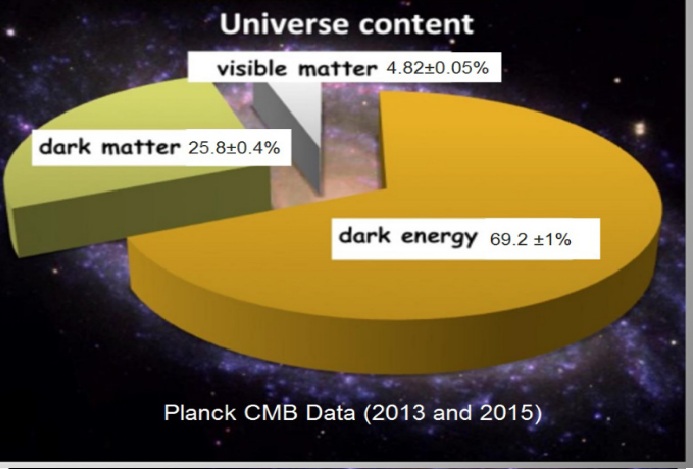

Five, Planck CMB data (2013 and 2015) provided the following:

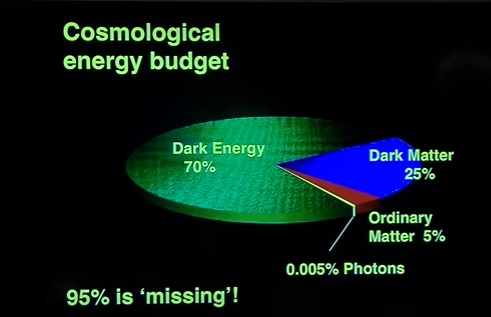

(dark energy = 69.2%; dark matter = 25.8%; and visible matter = 4.82%)

Neff = 3.04 +/- …

Hubble constant: H0 (early universe) = 66.93 ± 0.62 km s^−1 Mpc^−1 (using ΛCDM with Neff = 3)

These were further supported by the Dark Energy Survey.

Six, the local value of the Hubble constant: H0 (now, later universe) = 73.24 ± 1.74 km s^−1 Mpc^−1. The difference between this measurement and the Planck CMB value shows a dark flow rate of w = 9%.

Seven, the LIGO observation of two coalescing neutron stars ruled out most MOND models in October 2017.

Eight, there is no difference between matter and its antimatter other than their opposite electric charges.
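Two of the numbers in the list above can be cross-checked with simple arithmetic. This Python sketch assumes (the post does not give its formula) that the dark flow rate w is the fractional excess of the local H0 over the Planck H0, and that the CC ~3×10^−122 is Λ expressed in Planck units, Λ·l_P², with Λ = 3H0²Ω_Λ/c² from flat ΛCDM. The physical constants are standard values; the function names are hypothetical.

```python
# Values quoted in the list above
H0_PLANCK = 66.93     # km/s/Mpc, early universe (Planck CMB, LambdaCDM)
H0_LOCAL = 73.24      # km/s/Mpc, local (later-universe) measurement
OMEGA_LAMBDA = 0.692  # dark-energy fraction (69.2%)

# Standard physical constants (SI units)
C = 299_792_458.0             # speed of light, m/s
MPC_IN_M = 3.0857e22          # one megaparsec, m
PLANCK_LENGTH = 1.616255e-35  # m


def fractional_difference(local: float, early: float) -> float:
    """Relative excess of the local H0 over the early-universe H0."""
    return (local - early) / early


def lambda_in_planck_units(h0_km_s_mpc: float, omega_lambda: float) -> float:
    """Cosmological constant Lambda * l_P^2, using the flat-LambdaCDM
    relation Lambda = 3 * H0^2 * Omega_Lambda / c^2."""
    h0_si = h0_km_s_mpc * 1e3 / MPC_IN_M         # H0 in 1/s
    lam = 3.0 * h0_si**2 * omega_lambda / C**2  # Lambda in 1/m^2
    return lam * PLANCK_LENGTH**2


print(f"H0 difference: {fractional_difference(H0_LOCAL, H0_PLANCK):.1%}")
print(f"Lambda in Planck units: {lambda_in_planck_units(H0_PLANCK, OMEGA_LAMBDA):.1e}")
```

Measured relative to the local value instead, the H0 difference is about 8.6%; either choice rounds to roughly 9%. The Λ estimate comes out near 2.8 × 10^−122, in line with the ~3×10^−122 quoted.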

The failed Inter-Universes Escape

Under total siege by the data angels, the Higgs-mechanism-led army planned an ‘Inter-Universes Escape’. Its war plan was very simple, with two tactics.

One, blind its own eyes and yell super loud: {We are the only game in town.} For this, they organized a Munich conference: {Why Trust a Theory? Reconsidering Scientific Methodology in Light of Modern Physics, 7-9 December 2015, see http://www.whytrustatheory2015.philosophie.uni-muenchen.de/index.html }.

Two, INVENT an almost unlimited number of ghost universes by using the dominant cosmological theory, ‘inflation cosmology’.

“Inflation” was a work of reverse engineering for resolving some cosmological observations, such as the flatness, horizon and homogeneity facts. As reverse engineering, inflation of course fits almost all the old data and many NEW observations. But almost all reverse-engineered theories are constrained only by the THEN-observed data, without any ‘guiding principle’.

That is, the ‘initial condition’ of inflation cannot be specified or determined. This lack of guidance allows an unlimited number of ‘inflation models’ to be invented. Of course, it leads to ‘eternal inflation’, with unlimited bubble universes.

At the same time, M-string theory also reached its final destination, the ‘String Landscape’, which also has unlimited string vacua, again giving unlimited bubble universes (the Multiverse). That is,

“Eternal inflation” = ‘string landscape’ = multiverse

Now, there is a CONVERGENCE coming from two independent pathways, and this could be a great justification for its validity.

With the super weapon of the Multiverse, ‘the Higgs-mechanism-led army’ is no longer besieged by the angels of facts. The facts (nature constants, etc.) of this universe are just random happenstance, and even Nature does not know how to calculate them.

The only way to kill this Multiverse escape is by showing:

One, ALL the angel facts of THIS universe can be calculated.

Two, ALL the angel facts of THIS universe are bubble-independent, see http://prebabel.blogspot.com/2013/10/multiverse-bubbles-are-now-all-burst-by.html .

See https://tienzengong.wordpress.com/2015/04/22/dark-energydark-mass-the-silent-truth/

See https://tienzengong.wordpress.com/2016/04/24/entropy-quantum-gravity-cosmology-constant/

About the Higgs, see https://www.linkedin.com/pulse/before-lhc-run-2-begins-enough-jeh-tween-gong

More discussion of M-string theory is available at https://tienzengong.wordpress.com/2016/09/11/the-era-of-hope-or-total-bullcrap/ .

The Arch-Demons

In addition to ruling out the Multiverse nonsense, there are some other major issues:

One, BaryonGenesis

Two, the dark energy/dark mass

Three, the gravity/spacetime

Four, is ‘Quantum-ness’ fundamental? (Including its measurement and superposition issues).

In G-theory, the ‘quantum-ness’ is not fundamental but emerges from the dark energy, see http://prebabel.blogspot.com/2013/11/why-does-dark-energy-make-universe.html .

Furthermore, the G-theory universe is all about ‘computation’, that is, there must be a computing device in the laws of physics. And, of course, there is. In G-theory, both the proton and the neutron are the bases of a Turing computer, see http://www.prequark.org/Biolife.htm .

These two points show that ‘quantum-ness’ is not about ‘uncertainty’ but is all about ‘Cosmo-certainty’, see https://tienzengong.wordpress.com/2014/12/27/the-certainty-principle/ . That is, the Copenhagen doctrine is in fact one of the Arch-Demons.

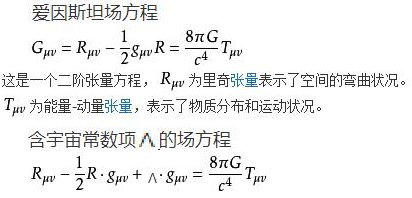

In addition to ‘computation’, THIS (not other-verse) universe is all about energy and mass. So, the Structure Function of THIS universe can be defined as:

S (universe) = S (energy, mass)

= S (dark energy, dark mass, visible relativistic mass/energy)

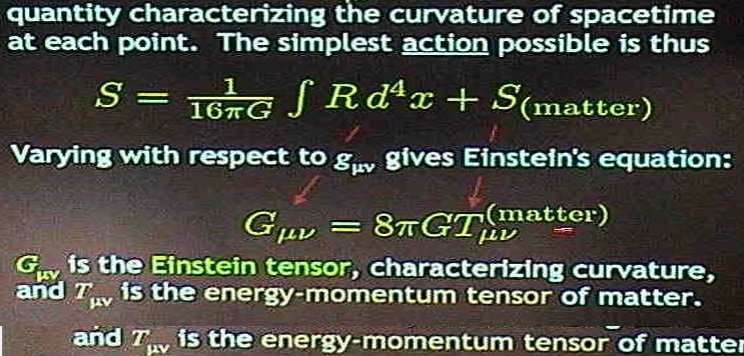

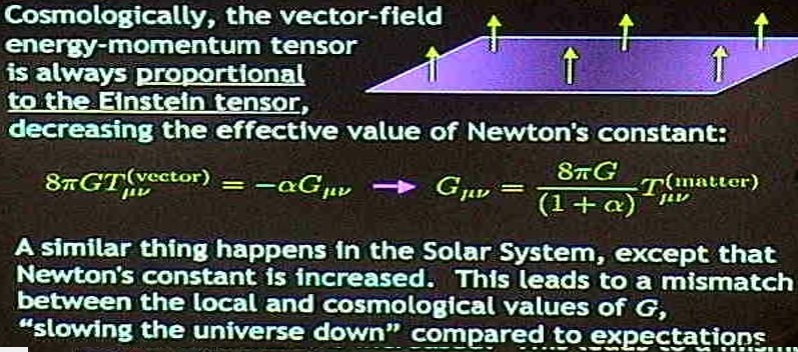

As both Newtonian gravity and GR are related to the structure of this universe, gravity can be defined by the S-function as:

Gravity = G (S) = G (dark energy, dark mass, visible mass)

= G (dark energy) @ G (mass)

G (mass) has only one parameter, mass. This FACT shows that every ‘mass’ must interact with ALL other masses in THIS universe. That is, the Simultaneity Function can be defined by G (mass):

G (mass) = Si (mass); G (mass) is a simultaneity function.

This Si function can be renormalized only if the gravitational interaction is transmitted instantaneously. In fact, if the Sun’s gravity reached Earth at light speed, it would not fit reality. The Sun/Earth gravitational interaction is precisely described by Newton’s law of gravity, which encompasses instantaneity.

So, for Sun/Earth gravity at least (if not for other cases), G (mass) should be a function of both {simultaneity and instantaneity}. Thus, we can define:
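For scale, a Python sketch of the naive light-travel numbers behind the Sun/Earth argument above: the delay for a signal crossing 1 AU at light speed, how far Earth moves in that time, and the angle v/c between the Sun’s delayed and current directions as seen from the moving Earth. It uses standard values for the AU and Earth’s mean orbital speed (assumptions, not from this post) and is not a model of either Newtonian or GR dynamics.

```python
import math

AU_M = 1.495978707e11          # astronomical unit, m
C = 299_792_458.0              # speed of light, m/s
EARTH_ORBITAL_SPEED = 2.978e4  # assumed mean orbital speed of Earth, m/s

# Time for a light-speed signal to cross 1 AU
delay_s = AU_M / C

# Distance Earth travels along its orbit during that delay
shift_m = EARTH_ORBITAL_SPEED * delay_s

# Small-angle estimate of the offset between delayed and current directions
angle_rad = EARTH_ORBITAL_SPEED / C
angle_arcsec = math.degrees(angle_rad) * 3600

print(f"light-travel delay: {delay_s:.0f} s")
print(f"Earth moves about {shift_m / 1e3:.0f} km in that time")
print(f"naive offset angle: {angle_arcsec:.1f} arcsec")
```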

G (Sun/Earth) = G (mass, simultaneity, instantaneity)

For Newtonian gravity, the ‘masses’ are wrapped into two points, the ‘centers of mass’, while simultaneity and instantaneity are an innate part of the equation.

For GR, the simultaneity and instantaneity are wrapped into the ‘spacetime sheet’. When mass interacts with the GR spacetime sheet, it transmits both simultaneously and instantaneously.

This kind of wrapping makes both gravity theories automatically incomplete, effective theories at best. Newtonian gravity is now viewed as wrong in terms of Occam’s razor, and thus it does modern physics no harm. On the other hand, GR is still viewed as the Gospel on gravity, and it has become the greatest hindrance to getting a correct gravity theory.

If GR did provide us some insights, that was long ago. The recent promotion of the greatness of the LIGO discovery will drag us further down into the hellfire dungeon. LIGO might indeed provide some additional data to confirm what we already know, but it cannot rescue GR from its fate as total trash. The following is just a short list of GR’s shortcomings.

One, GR plays zero role in the construction of quarks/leptons.

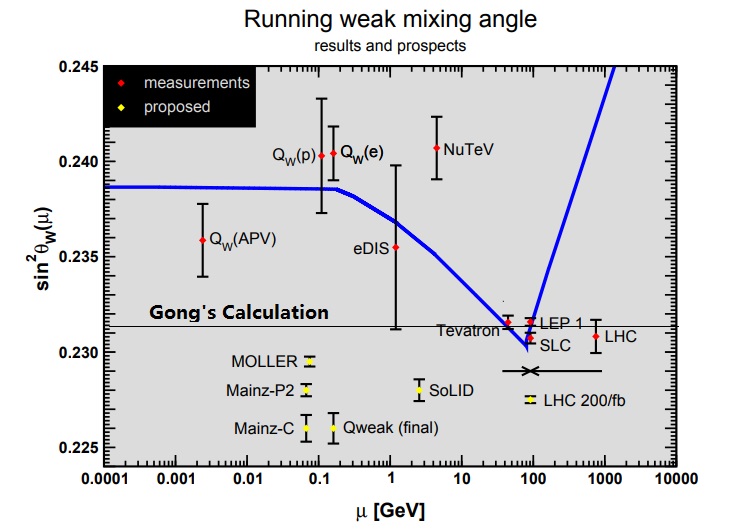

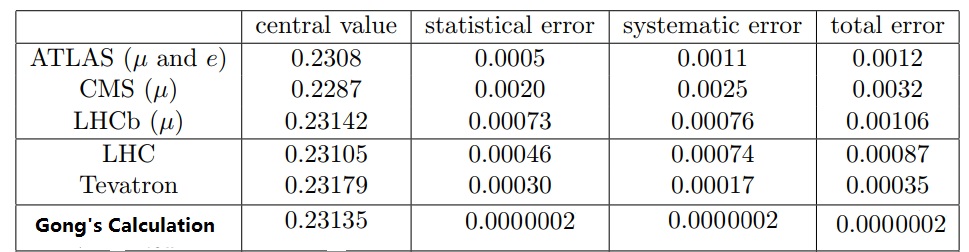

Two, GR plays zero role in calculating the nature constants, such as Alpha or Cabibbo/Weinberg angles, etc.

Three, GR fails to account for dark mass and dark energy, and is unable to derive the Planck CMB data:

(dark energy = 69.2%; dark matter = 25.8%; and visible matter = 4.82%)

Neff = 3.04 +/- …

Hubble constant: H0 (early universe) = 66.93 ± 0.62 km s^−1 Mpc^−1 (using ΛCDM with Neff = 3)

Four, GR provides no hint of any kind about BaryonGenesis, which is definitely a cosmological issue, and this alone should give GR the death sentence.

Five, last but not least, GR is not compatible with QM (quantum mechanics).

For more details, see https://medium.com/@Tienzen/yes-gr-is-very-successful-as-gravitational-lens-ff65efb63889 .

Yes, GR is of course a very EFFECTIVE gravity theory (as a great work of reverse engineering) but is definitely wrong as the correct theory. The GR wrapping, which hides the essence of gravity (simultaneity and instantaneity), renders it unsalvageable and unamendable. That is, it is in fact the greatest hindrance to getting a correct gravity theory. So, GR is the other Arch-Demon of modern physics.

Here is the ArchAngel

All the calculations for those angel facts (from ‘The rescuing angels’ section) are done in G-theory (Prequark Chromodynamics).

Superficially, the Prequark model is similar to the Preon (Rishon) model, but there are at least four major differences between them.

One, the Rishon model has sub-quarks (T, V): {T (Tohu, which means “unformed” in the Hebrew Genesis) and V (Vohu, which means “void” in the Hebrew Genesis)}. But Harari did not know what T is (just ‘unformed’). In contrast, the A (Angultron) is an innate angle, a base for calculating the Weinberg angle and Alpha, see http://prebabel.blogspot.com/2012/04/alpha-fine-structure-constant-mystery.html .

Two, the choice of (T, V) as the bottom in the Rishon model was ad hoc, a result of reverse engineering. In contrast, there is a very strong theoretical reason for where the BOTTOM is in G-theory.

In G-theory, the universe is ALL about computation, computable or non-computable. For computable, there is a TWO-code theorem. For non-computable, there are 4-color and 7-color theorems.

That is, the BOTTOM must consist of two codes. Any level below the two codes would become a TAUTOLOGY, just repeating itself.

Anything more than two codes (such as 6 quarks + 6 leptons) cannot be the BOTTOM.

Three, rishons (T or V) carry hypercolor to reproduce the quark color, but this setup renders the model non-renormalizable, quickly turning it into a big mess. So it was abandoned almost on day one. In contrast, prequarks (V or A) carry no color, and the quark color arises from the “prequark SEATs”. In short, the Rishon model cannot work out a {neutron decay process} different from the SM process.

This is one of the key differences between prequark and (Rishons and SM).

Four, the Preon/Rishon model does not have Gene-colors, which are the key drivers of neutrino oscillations.

More details on those differences, see http://prebabel.blogspot.com/2011/11/technicolor-simply-wrong.html .
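One possible reading of the ‘two-code’ argument (item Two above), offered as a hypothetical illustration rather than G-theory’s actual construction: any finite set of labels, such as the 6 quarks + 6 leptons mentioned there, can be re-expressed as words over a two-letter alphabet, so a two-code layer can always sit underneath a larger set of codes. The function names and labels below are illustrative, and this is not the prequark (V, A) seat assignment.

```python
from itertools import product


def binary_code_length(n_symbols: int) -> int:
    """Shortest fixed word length that gives n distinct symbols
    distinct binary (two-letter) codes."""
    return max(1, (n_symbols - 1).bit_length())


def binary_codes(labels: list[str]) -> dict[str, str]:
    """Assign each label a distinct fixed-length word over {'0', '1'}."""
    length = binary_code_length(len(labels))
    words = ("".join(bits) for bits in product("01", repeat=length))
    return dict(zip(labels, words))


# 6 quarks + 6 leptons = 12 labels, the count mentioned in the text
labels = ["u", "d", "c", "s", "t", "b",
          "e", "mu", "tau", "nu_e", "nu_mu", "nu_tau"]
codes = binary_codes(labels)
print(binary_code_length(len(labels)))  # 4
print(codes["u"], codes["nu_tau"])
```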

In addition to being a theory that describes particles, G-theory also resolves ALL cosmological issues, of which there are only three:

One, the initial condition of THIS universe

Two, the final fate of THIS universe

Three, the BaryonGenesis mystery

BaryonGenesis determines the STRUCTURE of THIS universe, that is,

G (S) = G (dark energy, dark mass, visible mass)

= G (dark energy) @ G (mass)

So, BaryonGenesis must be a function of G (S), which is described as:

(dark energy = 69.2%; dark matter = 25.8%; and visible matter = 4.82%)

The G-theory calculation of these Planck CMB data uses the ‘mass-LAND-charge’: all 48 fermions (24 matter and 24 antimatter) carry the same mass-land-charge, while their apparent masses differ. And the MASS-pie of THIS universe is evenly divided among those 48 fermions. That is, the antimatter does not in fact disappear (is not annihilated); it is merely invisible. See the calculation below. For more details, see https://tienzengong.wordpress.com/2017/10/26/science-is-not-some-eye-catching-headlines/ .

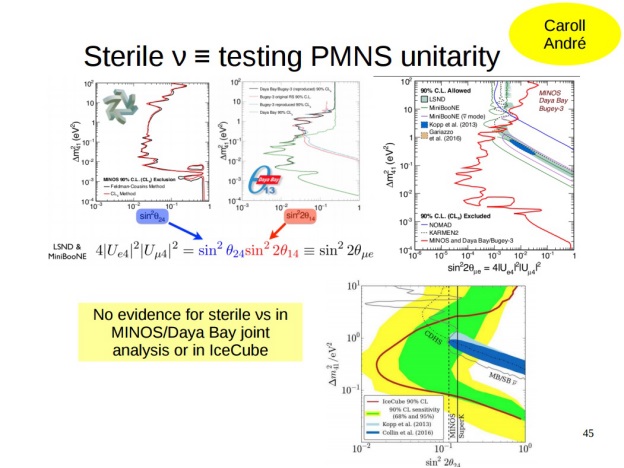

This BaryonGenesis of G-theory rules out the entire sterile dark sector (WIMPs, SUSY, sterile neutrino, axion, MOND, etc.) completely.

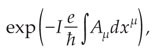

On November 8, 2017, Nature (magazine) announced the death of the WIMP, see http://www.nature.com/news/dark-matter-hunt-fails-to-find-the-elusive-particles-1.22970 .

This BaryonGenesis calculation must also link to the issues of {initial condition and the final fate}. And indeed, it does.

BaryonGenesis in fact has two issues.

One, where is the antimatter in THIS universe?

Two, why is THIS universe dominated by matter while not by antimatter?

The ‘One’ was answered with the above calculation.

The ‘Two’ can only be answered by ‘Cyclic Multiverse’.

However, for THIS universe to go into a ‘big crunch’ state, omega (Ω) must be larger than 1, while it is currently smaller than 1. That is, there must be a mechanism to move (evolve) Ω from less than 1 to more than 1.

Again, only G-theory has such a mechanism, and it is not separately invented but is part of the BaryonGenesis calculation: the ‘Dark Flow, W’.

This dark flow prediction of G-theory was confirmed in 2016, see https://tienzengong.wordpress.com/2017/05/15/comment-on-adam-riess-talk/ .


G-theory of course accounts for the ‘initial condition’, see https://tienzengong.wordpress.com/2016/12/10/natures-manifesto-on-physics-2/ .

Army of the Archangel

Weinberg has complained about the Arch-Demon (the Copenhagen doctrine) many times, but without making any new proposal, see http://prebabel.blogspot.com/2013/01/welcome-to-camp-of-truth-nobel-laureate.html .

On the other hand, ‘t Hooft (Nobel Laureate) did embrace G-theory from the standpoint of Cellular Automaton quantum-ness, see http://prebabel.blogspot.com/2012/08/quantum-behavior-vs-cellular-automaton.html . In 2016, he even published a book on it.

More details, see https://tienzengong.wordpress.com/2017/10/21/the-mickey-mouse-principle/ .

Sabine Hossenfelder just issued a death sentence for Naturalness (see http://backreaction.blogspot.com/2017/11/naturalness-is-dead-long-live.html ).

The death of Naturalness is a precursor for the death of Higgs Mechanism.

Steven Weinberg just revealed the death of M-string theory in his October 2017 video lecture.

Paul J. Steinhardt announced the death of ‘inflation cosmology’ in 2016.

Conclusion

The current hellfire nightmare of mainstream physics did not start in 2012 but is the result of three demons {the Copenhagen doctrine, GR and the Higgs mechanism}, which began 100 years ago. Fortunately, many angel facts (experimental data) have revealed their demon faces. Finally, the ArchAngel (G-theory) has come to the rescue. With the growing army of the ArchAngel, the salvation of human physics is now secured.